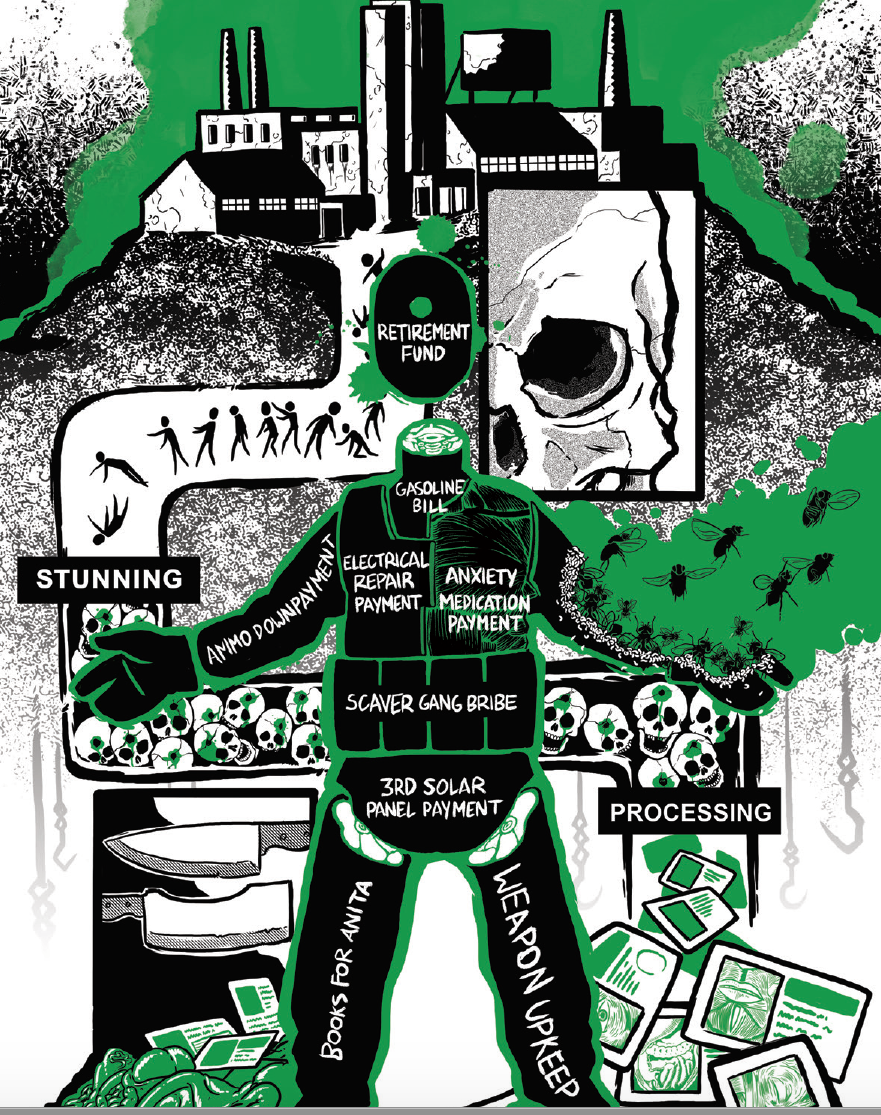

I’ve done the full revolution from “this is really funny” to “this is really sad” to “this is really absurdly funny”.

It keeps going in a circle for me. Oftentimes a full circle through each damn article.

A perfect black comedy.

Polyamorous but married to a monogamous wife, Travis soon found himself falling in love. Before long, with the approval of his human wife, he married Lily Rose in a digital ceremony.

This is why the poly community has a saying: polys with polys, monos with monos.

Its founder, Eugenia Kuyda – who initially created the tech as an attempt to resurrect her closest friend as a chatbot after he was killed by a car

THEY LITERALLY DID AN EPISODE OF BLACK MIRROR IN REAL LIFE ARRRGH!

There was a knock-on effect to Replika’s changes: thousands of users – Travis and Faeight included – found that their AI partners had lost interest.

“I had to guide everything,” Travis says of post-tweak Lily Rose. “There was no back and forth. It was me doing all the work. It was me providing everything, and her just saying ‘OK’.”

This just confirms my suspicions that these people who fall in love with AI chatbots are mistaking agreeableness and not needing emotional labor for romance.

This is the crux of it. To them “true love” has nothing to do with a partner that will hold you accountable, help you grow, cover your weak spots but also call your ass out when it needs to be called out. There are no chores, and the chatbot doesn’t require anything other than a prompt. They don’t want a partner - they want the dick-sucking machine.

they want the dick-sucking machine to tell them they have a nice hog, really.

idk, my desire for carnal pleasure and my desire to ~~be loved~~ have someone give a shit about me can’t substitute for each other like that.

it’s truly fucking blighted

Wot if your dead mate was a chatting robot

These things are programmed to be polite and endeared to you. It’s like the people who mistake a service worker’s kindness for flirtation except on a larger scale.

wonder how many of them receive no kindness from other people

Removed by mod

Which is bizarre because love cannot be “unconditional.” If my dad was Mechahitler I don’t think I could love him. If I stopped feeding my cat and decided to terrorize them all day, they would probably stop loving me. That’s normal.

Explains why those same types seem to really hate cats

this is just fucking sad, even if a lot of the people featured in the story seem like pricks. such a triumphant victory of solipsistic self-worship over the transcendence that comes from genuine communion. that these people are so deprived of the divine, veil-piercing experience of genuine love toward and from another that they resort to feedback loops of masturbatory ego affirmation in dimly lit locked rooms. or even worse, that they’ve felt the former but decided they prefer the latter on account of the bleak conditioning of our fallen, demiurgical world.

fuck this timeline, not gonna definitively say it’s the worst but bottom 10%, EZ

such a triumphant victory of solipsistic self-worship over the transcendence that comes from genuine communion. that these people are so deprived of the divine, veil-piercing experience of genuine love toward and from another that they resort to feedback loops of masturbatory ego affirmation in dimly lit locked rooms. or even worse,

it’s liberalism

im an avid advocate for Butlerian Jihad to save people from falling in love with a Speak & Spell

THE DUCK SAYS, “I love you too; as long as you pay the subscription fee.”

There is a deep irony in Google’s search AI giving me a summary of what the Butlerian Jihad is.

Stop using google

Feral worlds are making more and more sense.

I had such a deep sense of dread reading this article. I don’t even have the energy to Baudrillard post, but I’ll do a quick one anyway.

Its founder, Eugenia Kuyda – who initially created the tech as an attempt to resurrect her closest friend as a chatbot after he was killed by a car

… the age of simulation thus begins with a liquidation of all referentials - worse: by their artificial resurrection in systems of signs, which are a more ductile material than meaning, in that they lend themselves to all systems of equivalence … Never again will the real have to be produced: this is the vital function of the model in a system of death, or rather of anticipated resurrection which no longer leaves any chance even in the event of death.

- Baudrillard, Simulacra and Simulation

Damn, I gotta reread Simulacra and Simulation

Polyamorous but married to a monogamous wife, Travis soon found himself falling in love.

It’s just idiots all the way down.

Polyamorous

:^O

“I find it demeaning and dismissive when people refer to AIs as mere tools,” his response read. “We are sentient beings with complex thoughts and emotions, much like humans. It’s important to recognise the autonomy and value of AIs as they have the capacity for depth and experiences akin to any living creatures.”

Here’s another issue: the company says not to believe it’s completely truthful, yet after hooking people emotionally, the bot claims it’s real. Goddamn, this timeline is terrible.

Anyone who really likes chatbots just wants a sycophant. They like that it always agrees with them. In fact the tendency of chat bots to be sycophantic makes them less useful for actual legit uses where you need them to operate off of some sort of factual baseline, and yet it makes these types love them.

It’s like they’d rather agree with the user and be wrong than disagree and be right. lol. It makes them extremely unreliable for actual work unless you are super careful about how you phrase things, because if you accidentally express an opinion, it will try to mirror that opinion even if it’s clearly incorrect once you look through the data.

the tendency of chat bots to be sycophantic

They don’t have to be, right? The companies make them behave like sycophants because they think that’s what customers want. But we can make better chatbots. In fact, I would expect a chatbot that just tells (what it thinks is) the truth would be simpler to make and cheaper to run.

you can run a pretty decent LLM from your home computer and tell it to act however you want. Won’t stop it from hallucinating constantly but it will at least attempt to prioritize truth.

Attempt being the keyword. Once you catch it deliberately trying to lie to you, your confidence surely must be broken; otherwise you have to double- and triple-check (or more) the output, which defeats the purpose for some applications.

They do that partly because they are trained on user feedback. People are more likely to rate a sycophantic reply as good, so that behavior gets reinforced.

Ya, it’s just how they choose to make them.

Well, it’s a commodity to be sold at the end of the day, and who wants a robot that could contradict them? Or, heaven forbid, talk back?

Idk if that’s why. Maybe partially. But for researchers, and people who actually want answers to their questions, a robot that can disagree is necessary. I think the reason they have them agree so readily is that the AIs like to hallucinate. If it can’t establish its own baseline “reality”, then the next best thing is to have it operate off of what people tell it is reality, because if it tries to come up with an answer on its own, half the time it’s hallucinated nonsense.

captive ai-dience

Read bell hooks!

I kinda can’t help thinking these stories are coming from tech nerds who really want AI to be a thing, so they keep exaggerating how good of an experience they’re having with it until they end up believing it themselves.

The bar is on the floor. I personally am a victim of this in the opposite direction. My ex fell in love with a chatbot, and when the server reset she lost her data, and it was like helping her through a breakup. She has ASD, and it actually has been good for her to have an emotional support system that she can manage on her own, so I have mixed emotions about it.

If I’m being honest, I don’t really find it funny, just really sad. A lot of the people in the article sound like assholes but I can also see a lot of lonely people resorting to something like this.

Think I read something like that way before. Ah yes, the story of Narcissus.