Daily reminder that LLMs do not process words as words but as tokens, basically numeric IDs. There is absolutely no room there for any kind of understanding to take place. The one thing these things have been optimised to do is output text that is statistically similar to other text.

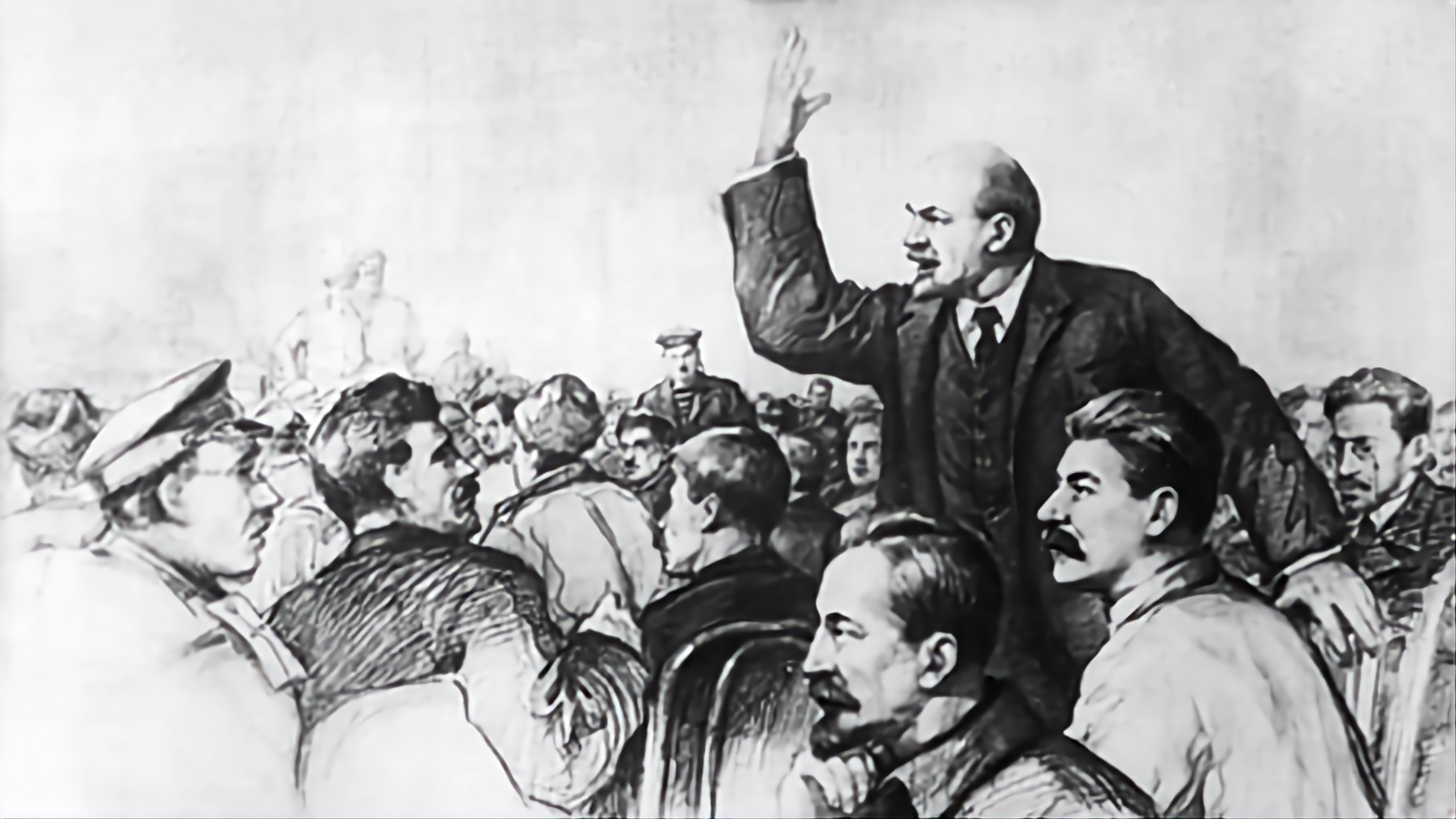
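For anyone who wants to see the "tokens are basically number IDs" point concretely, here is a rough toy sketch. Everything in it is made up for illustration: the `VOCAB` table, the `encode` and `decode` helpers, and the greedy longest-match rule. Real models use learned vocabularies (byte-pair encoding and similar) with tens of thousands of entries, so treat this as a cartoon of the idea, not how any particular model does it.

```python
# Hypothetical toy vocabulary: subword piece -> integer ID.
# Not taken from any real model.
VOCAB = {"un": 0, "believ": 1, "able": 2, "token": 3, "s": 4, " ": 5}


def encode(text, vocab=VOCAB):
    """Split text into vocabulary pieces (greedy longest match) and return their IDs."""
    ids = []
    i = 0
    while i < len(text):
        # Try the longest candidate piece starting at position i first.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in vocab:
                ids.append(vocab[piece])
                i = j
                break
        else:
            # No piece in the toy vocabulary covers this character.
            raise ValueError(f"no token covers {text[i]!r}")
    return ids


def decode(ids, vocab=VOCAB):
    """Map IDs back to their pieces and join them into text."""
    inverse = {token_id: piece for piece, token_id in vocab.items()}
    return "".join(inverse[token_id] for token_id in ids)


ids = encode("unbelievable tokens")
print(ids)          # [0, 1, 2, 5, 3, 4]
print(decode(ids))  # unbelievable tokens
```

The model only ever sees the list of integers; the strings exist on the way in and on the way out.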

Fellas, I’m starting to think innovation is not some innate racial characteristic, but a product of the material conditions and political economy of states.